Odins Fist, on 29 November 2014 - 04:54 PM, said:

I still stand behind it: 3.2 GHz on a Phenom II chip isn't the greatest for MWO.

When I ran my Phenom II X6 1100T, I absolutely saw a difference between stock clocks (3.3 GHz) and 4.2 GHz.

On a newer Intel chip, 3.2 GHz is no problem.

You are absolutely correct about the Phenom II being a loser among CPUs for MWO. I overclocked my stock 3.2 GHz Phenom II X6 1090T (6-core Thuban) to 3.4 GHz and used it that way for a while. Then, just yesterday, after seeing people post here about their CPU overclocking successes, I pushed it further to 3.6 GHz (which seems to be the most it will do without serious water cooling). I now get barely playable, though not the smoothest, frame rates: I got an increase of at least 10 fps from just a 200 MHz CPU overclock. Hmm... I tend to agree with the guy who posted that 4.5 GHz is the minimum for this game (he has the same processor and got his smooth 60 fps in battles by going to 4.5 GHz).

I think the Phenom in particular is terrible for MWO. Don't get me wrong, other processors have different architectures, but Phenoms are the forerunners of today's AMD architecture, the first major redesign, so to speak. Phenoms don't have Hyper-Threading; it's one thread per physical core. How exactly they handle MWO's calculations I don't know, but they look horrible at MWO performance specifically. An old motherboard might add to the problem too, I would guess.
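To put the clock changes in this post in proportion, here is a minimal Python sketch that computes the percentage increase for each step. `pct_increase` is just an illustrative helper, and it says nothing about how fps scales with clock speed; it only shows that the 200 MHz bump from 3.4 to 3.6 GHz is roughly a 6% clock increase, while the 4.5 GHz figure mentioned above is about 40% over the 1090T's 3.2 GHz stock clock.

```python
def pct_increase(base_ghz: float, new_ghz: float) -> float:
    """Percentage increase going from base_ghz to new_ghz."""
    return (new_ghz - base_ghz) / base_ghz * 100.0


# Clock steps mentioned in the thread (GHz):
# stock -> 3.4, 3.4 -> 3.6, stock -> 3.6, stock -> 4.5
steps = [(3.2, 3.4), (3.4, 3.6), (3.2, 3.6), (3.2, 4.5)]
for base, new in steps:
    print(f"{base} -> {new} GHz: +{pct_increase(base, new):.1f}%")
```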

PV Phoenix, on 29 November 2014 - 09:27 PM, said:

As far as I know, the only big defect with 970s is that a lot of them are shipping with coil whine, but they still run great. I got lucky: mine doesn't whine and maxes out everything. I think I read somewhere that EVGA's cheapest model has some issues, though, so if you have one of those it might be worth your time to look it up.

For the most part, a GTX 970 is great for gaming in general, but on its own it won't fix unoptimized games like MWO. Getting a new CPU is your main option for increasing performance (or overclocking, which helps a lot if you can do it). The other option is PGI deciding to optimize the game. The former is your best bet, though; the latter will probably never happen.

Yeah, I've read about the coil whine. I am glad that I only got three one-second squeaks of it before it went away, and mine overclocks fine to 1500/7900. It seems CryEngine and older CPUs are to blame for most of the trouble. The problem is that some of the blame lies with the newer GTX 970, though. I WAS getting BETTER, steadier in-battle frame rates with an overclocked GTX 465, which stayed SOLIDLY above 50 fps with the SAME setup and did not dip into the 40s at all during battles (also with everything on low). This new GTX 970 doesn't seem to be optimized for CryEngine (compared with my experience with the GTX 465), and the frame rates are wild: in battle they swing from the 40s to the 70s. They really fluctuate, and I am not too happy about it. Right now this is just a bare-minimum playable experience with my Phenom II X6 1090T overclocked to 3.6 GHz.
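"Solid above 50" versus "swinging from the 40s to the 70s" is a question of spread as well as average. If you jot down fps readings during a match, a small helper like the hypothetical `summarize_fps` below makes two cards easier to compare. It is only a sketch, assuming you already have the readings as a list of numbers; it does not capture them itself. A steadier card shows a higher minimum and a lower standard deviation even when the averages are similar.

```python
from __future__ import annotations

from statistics import mean, pstdev


def summarize_fps(samples: list[float]) -> dict[str, float]:
    """Summarize fps readings: lowest, highest, average, and spread (population std dev)."""
    if not samples:
        raise ValueError("need at least one fps reading")
    return {
        "min": min(samples),
        "max": max(samples),
        "mean": mean(samples),
        "stdev": pstdev(samples),
    }
```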

Flapdrol, on 30 November 2014 - 08:44 AM, said:

FurMark is a GPU stress tool, right? If you're overclocking the CPU, you should test with a CPU stress tool like Prime95.

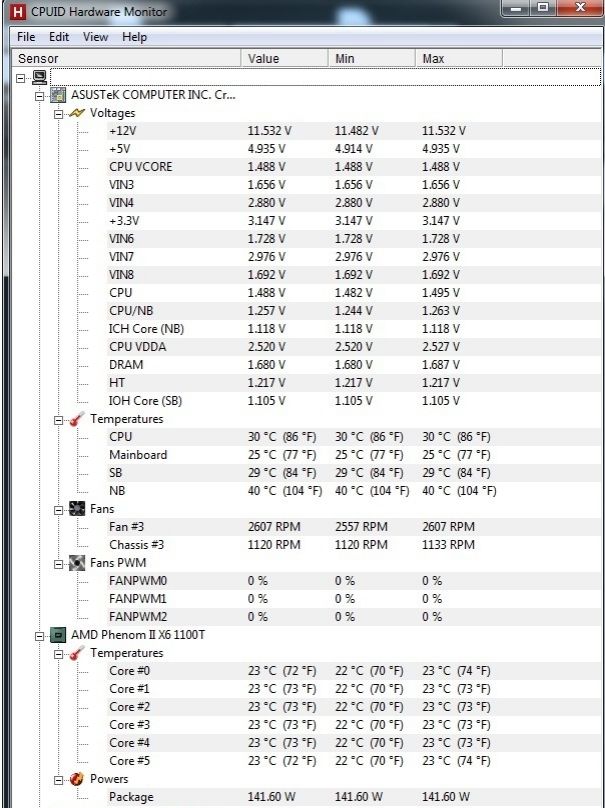

Make sure you keep an eye on the temperatures with something like RealTemp or Core Temp. If you raise the voltage, temps go up a lot, and Phenom IIs don't like heat.

There should be plenty of information online on how best to overclock Phenom IIs; I'd look around a bit.

Well, that's true, but FurMark has a button that brings up a CPU stress tool, so you can run CPU and GPU stress tests at the same time, which is what I did. I set the CPU burn-in to max on 5 cores (leaving 1 core free to handle the video side if needed) and also turned on FurMark's graphical burn-in test. I think you can download the FurMark CPU burn-in test separately, but in my case it came bundled as one program (the latest FurMark 1.15.0.0 download from Geeks3D.com).
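The idea of loading 5 of 6 cores and leaving one free can be sketched in Python with the standard `multiprocessing` module. This only illustrates the "one busy worker per core, minus a spare" layout using nothing beyond the standard library; the helper names are hypothetical, and it is not a substitute for Prime95 or OCCT, since it doesn't check results for errors or watch temperatures.

```python
from __future__ import annotations

import multiprocessing as mp
import os
import time


def worker_count(spare_cores: int = 1) -> int:
    """Number of busy workers: all logical CPUs minus `spare_cores`, at least 1."""
    return max(1, (os.cpu_count() or 1) - spare_cores)


def burn(seconds: float) -> int:
    """Keep one core busy with integer math for `seconds`; return the loop count."""
    deadline = time.monotonic() + seconds
    x, loops = 1, 0
    while time.monotonic() < deadline:
        # Simple linear congruential step: cheap, CPU-bound busy work.
        x = (x * 1103515245 + 12345) % 2**31
        loops += 1
    return loops


def run_burn_in(seconds: float, spare_cores: int = 1) -> list[int]:
    """Run one `burn` worker per core except `spare_cores`; return each worker's loop count."""
    workers = worker_count(spare_cores)
    with mp.Pool(processes=workers) as pool:
        return pool.map(burn, [seconds] * workers)
```

Calling `run_burn_in(600.0)` from under an `if __name__ == "__main__":` guard (required on Windows, where worker processes re-import the script) would keep all but one core busy for ten minutes; keep a temperature monitor open while it runs.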

Catamount, on 30 November 2014 - 10:00 AM, said:

These days I just go all-in-one with OCCT for CPU testing. It has two tests, one seemingly analogous to Prime95 and a Linpack test, plus a built-in temperature monitor and an adjustable temperature cutoff.

Thanks for the info. I am going to download and try that. Sounds like a good program.

http://www.ocbase.com/

xWiredx, on 30 November 2014 - 02:06 PM, said:

MWO loves CPU cycles. I don't know how many times this has been said, but it comes up multiple times in almost every single thread in the hardware section. While that Phenom II chip does perform reasonably well, keep in mind that it is about 4 1/2 years old and is bound to show its age in games like MWO that demand top-notch CPU performance to have all the bells and whistles turned on.

Yes, it's a very 'old' processor by MWO standards; I came to realize that in the last couple of days. Before that I thought it was still great, but seeing how another game, Skyrim, with high-resolution textures (absolutely everything maxed out) sometimes drops from a solid 60 fps into the 40s and 50s makes me wonder. I think it's wrong that neither the CryEngine nor the MWO programmers have come up with a simple offload or optimization of the calculations, either for CryEngine itself or for MWO in particular. These programs (CryEngine and MWO) have been on the market for a long time, and the stated 'recommended' PC specifications are ridiculous in light of my experience. All they have to do is tweak whatever drives the load during battle and redirect it to the GPU (my GPU is running at 30% usage when running MWO, LOL, and not all CPU cores are fully utilized either!), or optimize the code to run more efficiently on Phenom II processors specifically. That would ensure all the other processors are covered too, because it seems that Phenom II CPUs are specifically affected by this problem.

Oh, and I reiterate that when I get 40-ish fps in MWO, I get it with EVERY setting on low (DirectX 9 and DirectX 11 give the same ugly results). It only affects the IN-BATTLE experience; otherwise it's really fast at over 100 fps. You can see the same slowdown when switching between the "Skills" and "Mechlab" tabs: the fps drops suddenly, and THAT is what you get in an actual battle with other people (as opposed to the solo "Testing Grounds" maps). I get over 100 fps in Testing Grounds maps no matter what I do, but as soon as I'm in a battle the frame rate drops from 90 to the 40s. That's fast enough for LRMing but not for quick person-to-person combat.

Edited by Jesus DIED for me, 01 December 2014 - 08:41 AM.

Jesus DIED for me, on 29 November 2014 - 04:58 PM, said:
