I want to, but I'm not prepared with the backup funds.

Edited by DV McKenna, 24 December 2012 - 01:24 AM.

Posted 24 December 2012 - 01:24 AM

Posted 24 December 2012 - 09:02 PM

DV McKenna, on 24 December 2012 - 01:24 AM, said:

Posted 24 December 2012 - 09:33 PM

Posted 25 December 2012 - 03:00 AM

Posted 25 December 2012 - 03:10 PM

Posted 25 December 2012 - 08:51 PM

Sen, on 25 December 2012 - 03:10 PM, said:

Posted 26 December 2012 - 07:11 AM

Edited by Sen, 26 December 2012 - 07:12 AM.

Posted 26 December 2012 - 08:21 PM

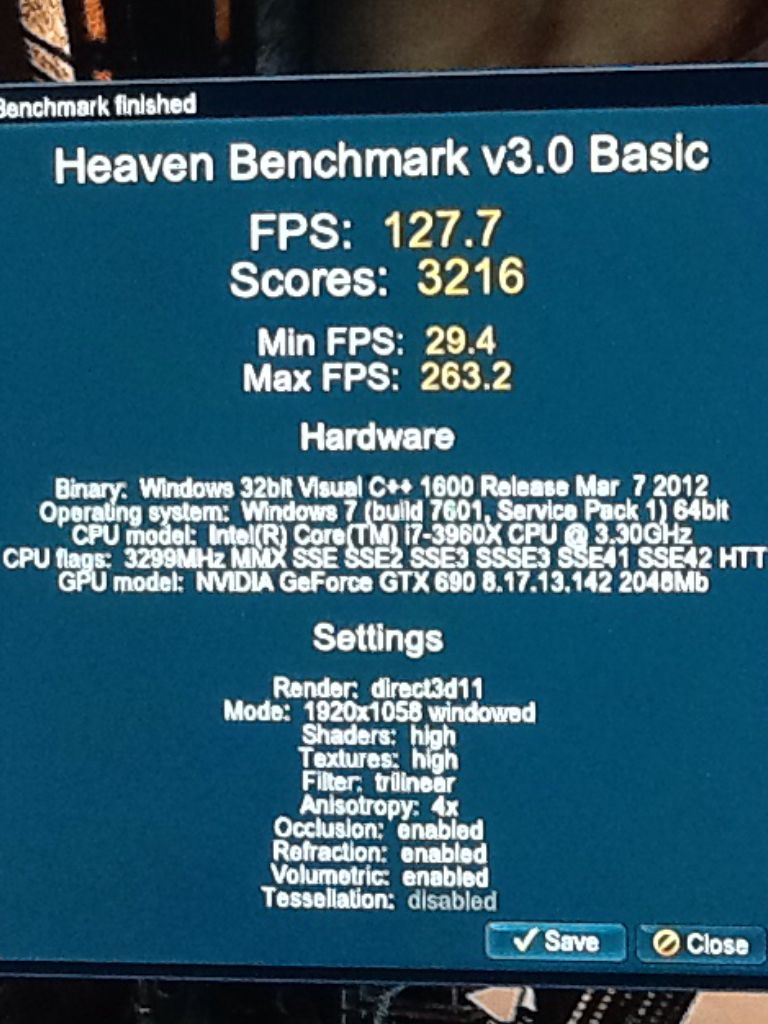

FurMark burn-in results. I also looped Unigine Heaven maxed out for nearly an hour. Only a couple of tiny artifacts every 8-10 loops, and that's way more stress than it'll ever see in an actual game.

Catzilla Beta Benchmark @ 4.5 GHz & 1300/1500 GPU core/mem. Not too sure about this benchmark though. They give much higher scores to GTX 670s & 680s, when the real-world performance of the 7950 & 7970 is superior. Methinks Nvidia may have greased some dev palms.

The bad news: Unfortunately, my 3570K is a dud for OC'ing. Temps are pretty awesome for a single-rad loop w/ a CPU & GPU on it. The loop reaches equilibrium at 67 °C under full load even with the CPU smoking @ 1.495 Vcore. The CPU boots stable at 1.2 Vcore @ a 44 multi, 1.38 Vcore @ a 45 multi, and needs a downright horrible 1.495 Vcore to be stable at 4.6 GHz. It's still w/in the safe range with water cooling at 1.5 V, but I dropped it back to 4.5 GHz to stay a bit safer. (1.55 is Ivy's max under water, 1.75 Vcore under DICE, and 1.95 Vcore under LN2/LHe3. Intel's spec sheet says 1.52 V, but...). In any case, I'll just pop for the 3770K in a bit. I'm sure someone else will be happy w/ 4.5 GHz. I, otoh, want a 50+ multi, no compromises.

Meh, no one wins the silicon lottery every time, I guess. I struck gold on the GPU, and stepped in cr@p with the CPU.

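For anyone who wants to see how steep that voltage curve is, here's a rough sketch that tabulates the Vcore figures quoted above. It assumes a 100 MHz base clock (stock for Ivy Bridge, but not stated in the post), and treats the 4.6 GHz result as a 46 multi on that basis. The names `BCLK_MHZ`, `stable_points`, `core_clock_ghz`, and `vcore_steps` are made up for illustration.

```python
# Rough sketch of the numbers in the post above.
# ASSUMPTION: 100 MHz base clock (stock Ivy Bridge); the post does not state it.
BCLK_MHZ = 100

# (multiplier, Vcore in volts) as reported. The 46 entry is inferred from
# "4.6 GHz" under the 100 MHz BCLK assumption.
stable_points = [(44, 1.2), (45, 1.38), (46, 1.495)]


def core_clock_ghz(multiplier, bclk_mhz=BCLK_MHZ):
    """Core clock in GHz = multiplier * base clock (MHz) / 1000."""
    return multiplier * bclk_mhz / 1000


def vcore_steps(points):
    """Extra Vcore needed for each step up to the next reported multiplier."""
    return [
        (m2, round(v2 - v1, 3))
        for (m1, v1), (m2, v2) in zip(points, points[1:])
    ]


for mult, vcore in stable_points:
    print(f"{mult}x -> {core_clock_ghz(mult):.1f} GHz @ {vcore} V")

for mult, delta in vcore_steps(stable_points):
    print(f"step up to {mult}x costs +{delta} V")
```

Swap in your own chip's stable points to compare; nothing here predicts what a given CPU will do, it only does the arithmetic on numbers you already measured.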

Edited by Teh Rav3n, 26 December 2012 - 08:41 PM.

Posted 26 December 2012 - 08:42 PM

Edited by Teh Rav3n, 26 December 2012 - 08:46 PM.

Posted 27 December 2012 - 08:30 AM

Posted 27 December 2012 - 01:28 PM

Edited by Teh Rav3n, 27 December 2012 - 01:39 PM.

Posted 27 December 2012 - 06:44 PM

Posted 27 December 2012 - 07:41 PM

Edited by Teh Rav3n, 27 December 2012 - 07:43 PM.

Posted 31 December 2012 - 07:48 PM

Edited by Teh Rav3n, 01 January 2013 - 12:07 AM.

Posted 01 January 2013 - 12:00 AM

Posted 16 January 2013 - 02:14 AM
